Why Do UX Research Cheat Sheets Matter?

We answered that question in the first article of our UX Research Cheat Sheet series, "Cheatsheets for UX research! Why?"

In the second article of this series, we shared a cheat sheet for preparing and conducting user interviews, a useful reference to keep handy. Read it in "Cheatsheets for User interviews".

In this cheat sheet, the third in the series, we take up unmoderated usability testing.

To build some context: usability testing is an evaluative user research method that lets key stakeholders and the project team understand how people interact with a product. It can be performed during design, during development, or after the product is released. In a usability test, participants perform specific tasks using the product and then give their feedback.

Usability testing can be moderated or unmoderated, and each can be run remotely or in person.

Types of Unmoderated Usability Testing

In usability testing, "unmoderated" means that participants use the product without anyone watching or interacting with them during the session.

Two Types of Unmoderated Usability Testing explained

a) In-person unmoderated usability testing

Participants use the product at a predetermined physical location, and no one watches them while they do. Their interactions and any comments they make may be recorded, and the researcher can probe them after the session is complete. Because this method requires researchers and participants to be at a fixed location at a fixed time (read: logistics), it is less popular than remote unmoderated usability testing.

b) Remote unmoderated usability testing

This method uses an internet-based (SaaS) user research platform. The participants and the researcher need not be in the same place or available at the same time; participants can be anywhere in the world with an internet connection. Participants perform the tasks while their interactions are recorded and uploaded to a server. Later, at a time of their choosing, researchers review the screen recordings and interpret the participants' feedback, which includes think-aloud comments and may include a recording of the participant's face.

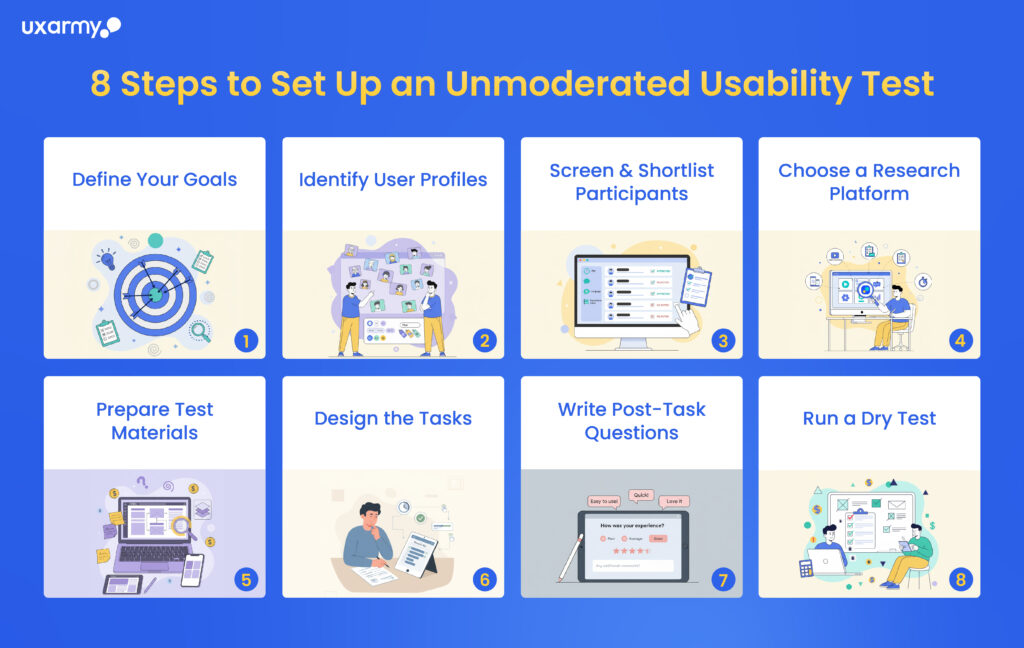

How to Set Up Unmoderated Usability Testing

The following preparation is needed to conduct unmoderated remote usability testing successfully:

i) Establish the goals of usability testing

ii) Identify the participant profile

iii) Screen and shortlist participants

iv) Select a user research platform

v) Prepare test materials

vi) Prepare tasks to be performed and the list of usability metrics

vii) Prepare questions to be asked

viii) Do a test run prior to test launch

Each step is detailed below for reference.

i) Establish the goals of usability testing

To establish the goals, answer these quick questions:

- What do stakeholders want to find out?

- How would the findings be valuable to the business?

- Is the research method suitable for all the goals?

- Do all stakeholders share an understanding of the goal statements?

- Which goals can be deferred to subsequent rounds of usability testing?

ii) Identify the participant profile

- Number of profiles / personas being targeted

- Demographics of the target audience, e.g., age group, gender, nationality, income

- Relevant behavioral attributes, e.g., what they do, like and dislike

- Number of participants in each profile, i.e., the sample size

iii) Screen and shortlist participants

- Prepare a list of screener questions to shortlist participants

- Identify which answers qualify or disqualify candidates from participating

- Include a question to gauge participants' comfort with thinking aloud

- Include a question to check how well participants can articulate feedback

- Gather contact details to distribute the usability test and reach out if necessary

- Ask participants to consent to taking part and to being recorded

- Ask participants to consent to the storage of their personal information, if any

- State the incentive type / amount and the method of payment
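Qualify / disqualify rules like these can be kept as a small rule table. The Python sketch below assumes screener answers have been exported as plain strings; the question IDs (`works_in_ux_research`, `shops_online`, and so on), the `Candidate` type and the `qualifies` / `shortlist` helpers are hypothetical illustrations, not part of any research platform's API. Consent and think-aloud comfort are treated as mandatory "yes" answers, so a missing answer fails the screener rather than slipping through.

```python
from dataclasses import dataclass

# Hypothetical screener rules; question IDs and answers are illustrative only.
# Any of these answers disqualifies a candidate.
DISQUALIFYING_ANSWERS = {
    "works_in_ux_research": {"yes"},
}

# The candidate must give one of the allowed answers to every question listed.
REQUIRED_ANSWERS = {
    "shops_online": {"weekly", "daily"},
    "comfortable_thinking_aloud": {"yes"},
    "consents_to_recording": {"yes"},
    "consents_to_data_storage": {"yes"},
}


@dataclass
class Candidate:
    name: str
    answers: dict[str, str]


def qualifies(candidate: Candidate) -> bool:
    """True if no answer disqualifies the candidate and all required answers are given."""
    for question, bad in DISQUALIFYING_ANSWERS.items():
        if candidate.answers.get(question) in bad:
            return False
    # A missing answer (None) is never in an allowed set, so it fails here
    for question, allowed in REQUIRED_ANSWERS.items():
        if candidate.answers.get(question) not in allowed:
            return False
    return True


def shortlist(candidates: list[Candidate]) -> list[str]:
    """Names of candidates who pass the screener, in their original order."""
    return [c.name for c in candidates if qualifies(c)]


if __name__ == "__main__":
    base = {
        "works_in_ux_research": "no",
        "shops_online": "weekly",
        "comfortable_thinking_aloud": "yes",
        "consents_to_recording": "yes",
        "consents_to_data_storage": "yes",
    }
    candidates = [
        Candidate("Asha", base),
        Candidate("Ben", {**base, "works_in_ux_research": "yes"}),
        Candidate("Chen", {**base, "consents_to_recording": "no"}),
    ]
    print(shortlist(candidates))  # ['Asha']
```

Keeping the rules as data rather than hard-coded `if` statements makes it easy to adjust the screener between study rounds without touching the logic.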

iv) Select a user research platform

- Select a remote usability testing platform based on the data you want to collect and what participants will be testing

- Does the platform support the research method, e.g., task-based tests, card sorting, tree tests, 5-second tests?

- Does the platform provide participant recruitment?

- Does the platform support your test material, e.g., live mobile apps?

- Does the platform support the devices the test must run on, e.g., computer or mobile device?

- Is the platform video-centric, e.g., usertesting.com, UserZoom, UXArmy?

- Is the platform usability-metrics-centric, e.g., Maze, Useberry, UsabilityHub?

v) Prepare test materials

- Decide what you want participants to test. Options include:

  - Wireframes made with tools like Balsamiq, Figma or Sketch

  - Design prototypes made with Figma, MarvelApp or Proto.io

  - Mobile websites – staging or live

  - Mobile apps – staging or live

- Prepare a non-disclosure agreement for participants to sign, if necessary

- The usability testing plan must list the availability of test materials as a dependency

vi) Prepare tasks to be performed and the list of usability metrics

- List the tasks participants will perform, shaped around what users would accomplish with the product

- Keep to 5 to 7 tasks

- Each task must map to the goals of the study

- Tasks must be clearly worded and unambiguous

- Each task must have clear success criteria

- Each task must state an end state so the participant knows when the task is complete

- Define the task flow, i.e., the sequence in which tasks are presented

- Identify usability metrics for each task:

  - Success / fail

  - Time to complete the task

  - Time to first click / tap

  - Number of clicks / taps

  - Number of swipes

  - Navigation path, e.g., the route taken and the number of pages / screens

  - Number of retries for a task
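Most platforms compute these metrics for you, but it can help to see how individual attempts roll up into per-task figures. The Python sketch below assumes each attempt has been exported as one record; the `TaskAttempt` fields, the `summarize` helper and the sample numbers are hypothetical and purely illustrative, not output from any real study or tool.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class TaskAttempt:
    participant: str
    task_id: str
    success: bool         # met the task's success criteria
    seconds: float        # time to complete the task
    first_click_s: float  # time to first click / tap
    clicks: int           # number of clicks / taps
    retries: int          # number of retries


def summarize(attempts: list[TaskAttempt]) -> dict[str, dict[str, float]]:
    """Aggregate per-task usability metrics from individual attempts."""
    by_task: dict[str, list[TaskAttempt]] = {}
    for a in attempts:
        by_task.setdefault(a.task_id, []).append(a)

    summary: dict[str, dict[str, float]] = {}
    for task_id, rows in by_task.items():
        successes = [r for r in rows if r.success]
        summary[task_id] = {
            "success_rate": len(successes) / len(rows),
            # Time on task computed over successful attempts only
            "median_seconds_success": (
                median(r.seconds for r in successes) if successes else float("nan")
            ),
            "median_first_click_s": median(r.first_click_s for r in rows),
            "mean_clicks": mean(r.clicks for r in rows),
            "mean_retries": mean(r.retries for r in rows),
        }
    return summary


if __name__ == "__main__":
    # Made-up sample attempts for one hypothetical task
    attempts = [
        TaskAttempt("p1", "find_store", True, 40.0, 3.0, 5, 0),
        TaskAttempt("p2", "find_store", True, 60.0, 4.5, 7, 0),
        TaskAttempt("p3", "find_store", False, 90.0, 10.0, 12, 2),
    ]
    for task, metrics in summarize(attempts).items():
        print(task, metrics)
```

The sketch reports time on task for successful attempts only and uses medians for timings, since timing data from small samples is often skewed by a few slow sessions; other conventions (for example including failed attempts) are equally defensible as long as they are applied consistently.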

vii) Prepare questions to be asked

- Survey questions can be asked after all tasks are complete, and also immediately after any individual task

- Survey questions can be of various types:

  - Open question

  - Single or multiple selection

  - 5-point / 7-point Likert scale

  - Dropdown

  - Matrix

  - Ranking

- Ask about recall and task difficulty, and collect any additional background information about participants, e.g., similar experiences they have had

- Some researchers include standard questionnaires such as SUS, USERindex or UEQ
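Standard questionnaires come with fixed scoring rules. For SUS (System Usability Scale), each of the ten items is answered on a 1 to 5 scale; odd-numbered items contribute (response - 1), even-numbered items contribute (5 - response), and the sum is multiplied by 2.5 to give a score from 0 to 100. The Python sketch below implements that rule; the function names are our own, and it assumes responses are stored in questionnaire order.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Score one participant's System Usability Scale (SUS) questionnaire.

    responses: the ten answers on a 1-5 scale, in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each SUS response must be between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded, even-numbered negatively
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5  # rescale the 0-40 sum to 0-100


def mean_sus(all_responses: list[list[int]]) -> float:
    """Average SUS score across participants."""
    return mean(sus_score(r) for r in all_responses)


if __name__ == "__main__":
    print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

Note that a SUS score is not a percentage; it is usually interpreted against benchmarks rather than read as "75% usable".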

viii) Do a test run prior to test launch

- Also known as a dry run: taking the test yourself helps you refine it

- Do a dry run with both internal and external participants

- Check whether the test is long enough to cause participant fatigue

- Check that everything works as expected, e.g., prototypes load properly and are the correct versions

- Fine-tune the questions and add anything that was missed

- If all is well, launch the test

This is the third in our series of cheat sheets for user researchers. Throughout the year, we will continue to develop more cheat sheets and infographics for user researchers, and we hope they come in handy in your work.

Frequently asked questions

What metrics matter in remote usability testing?

Useful metrics include success rate, time to complete each task, number of clicks, taps and swipes, navigation path length, retries, and subjective measures collected through surveys or Likert scales. Many remote usability testing tools report these out of the box.

How many tasks should I include in an unmoderated test?

Aim for 5 to 7 tasks per session, each aligned with the testing goals. More tasks risk participant fatigue and lower-quality feedback.

What participant profile should I target?

Define personas or the target audience by demographics and behavior, and use screener questions to filter candidates. If you are testing different concepts, include enough variety among participants. Many user research platforms can also help with recruitment.

Which platforms are best for remote unmoderated testing?

Look for platforms that offer screen and voice recording, support live apps or prototypes, and provide analytics, for example UXArmy, Maze or Useberry. Check that the features you need are supported: mobile app tests, video-centric review, or metrics dashboards.

How do I avoid common pitfalls in unmoderated tests?

- Write clear, unambiguous tasks.

- Do a dry run to catch staging or prototype glitches.

- Make sure recordings work and that participants understand the think-aloud instructions.

- Keep test materials lightweight to avoid confusion.

Can unmoderated testing replace in-person moderated sessions?

Not fully. Unmoderated tests excel at gathering broad, inexpensive feedback and early validation, but moderated or in-person testing helps uncover deeper insights, lets researchers observe body language, and allows ambiguous feedback to be clarified on the spot.