Choosing the perfect outfit for a special occasion often involves trying on different options. You try one, then the other, maybe even ask a friend for their opinion.

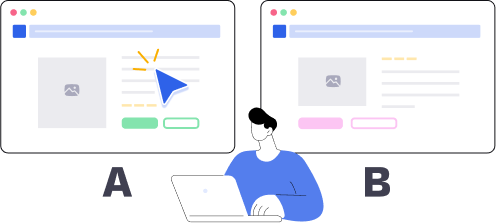

This simple process mirrors A/B testing in UX design.

In its simplest form, A/B testing is experimenting to find what works best. It helps you decide which website design, navigation, or user interaction is most effective.

Instead of relying on assumptions, you gather real user data to make informed choices.

Looking to make your website both stunning and user-friendly? You’ve come to the right spot! In this guide, we’ll take you on a step-by-step journey to mastering A/B testing.

Whether you’re just starting or looking to fine-tune your website, we’ll show you how to plan your experiments, track user behavior, and make decisions that elevate the user experience.


What Is A/B Testing & Why Do You Need It?

A/B testing is a way to compare two different versions of a button, a webpage, or a layout to see which works better for users.

Let’s say you’re trying to decide which CTA button works best on your website. Should it say “Get Started” or “Join Now”? Should it be blue or green?

In an A/B test, you would set up two versions of the button:

Version A could have a blue button that says “Get Started.”

Version B might feature a green button with “Join Now.”

Each version is shown to a separate group of users, and their behavior such as clicks, conversions, or time spent on the page helps you identify which version is more effective.

Because the decision rests on observed behavior rather than opinion, A/B testing helps you make smarter, data-based decisions to improve user experience, boost engagement, or increase conversions.

With A/B testing, you can:

- Avoid costly redesigns: Test small changes before committing to a full redesign.

- Spot problems early: Identify usability or design issues before they affect users.

- Boost results: Increase clicks, sign-ups, or other key actions.

- Make confident decisions: Base your design or product choices on real user data.

Netflix famously uses A/B testing to optimize its content thumbnails. By experimenting with different artwork for shows, they discovered that even small changes, like emphasizing a character’s face, could lead to significant increases in views. By A/B testing changes throughout the product and tracking users over time, you can see whether your change improves retention or increases revenue.

You can A/B test almost anything, including:

- The text and color of call-to-action (CTA) buttons.

- Layout changes, like moving menus or images.

- Adjusting content wording or images to better match user expectations.

- Improving navigation flow to make the user journey smoother.

A/B testing is a reliable way to test ideas, improve user experience, and drive results. By experimenting with small changes, you can continuously improve your product or service with minimal risk.

How A/B Testing Works: A Step-by-Step Breakdown

A/B testing involves comparing two versions of a design, feature, or piece of content to see which one performs better. Here’s how you can systematically run an A/B test:

- Set a Goal: Define what you want to achieve with the test. This could be increasing clicks on a button, boosting sign-ups, or improving time spent on a page. Example: You want to increase the percentage of users clicking the “Subscribe” button on your blog.

- Identify What to Test: Choose one specific element to test. It could be a headline, a call-to-action button, an image, or a page layout. Testing one element at a time ensures you know exactly what caused any changes in user behavior. Common elements to test include:

- Call-to-action text or buttons (e.g., “Sign Up” vs. “Get Started”).

- Page layout (e.g., two-column layout vs. single-column layout).

- Images or headlines (e.g., using a product photo vs. a lifestyle image).

Example: You decide to test two versions of your homepage headline. Version A says, “Affordable Fitness Plans,” and Version B says, “Fitness Plans Tailored to You.”

- Create Variations: Develop two versions of the element you are testing:

- Control: The current version of the element or page (Version A).

- Variation: The updated or alternative version you want to test (Version B).

For example: If you are testing a call-to-action (CTA) button, your control might be a red button while the variation is a green one. Comparing the variation against the control shows which one works better with your audience and supports informed design decisions.

- Split Your Audience: Use an A/B testing tool (like VWO or Optimizely) to randomly split your traffic. Half of your audience sees the control version (A), and the other half sees the variation (B).

Example: For your headline test, users visiting your homepage are randomly and evenly divided between Version A and Version B so you can measure which headline performs better.

- Run the Test: Allow the test to run for a sufficient amount of time, ensuring enough users interact with both versions. This avoids skewed results due to small sample sizes or short testing periods. Example: You might run the test for one week and gather data from 10,000 visitors; the numbers you actually need depend on your traffic and on how small a difference you want to detect.

- Measure the Results: Use analytics to track performance metrics like click-through rates, conversions, or time spent on a page. Compare the results from both versions to determine which performed better. Metrics could include:

- Click-through rates (e.g., percentage of users clicking the button).

- Conversion rates (e.g., percentage of users completing a purchase).

Example: At the end of the test, you may see that Version B’s headline led to 20% more users clicking the “Subscribe” button compared to Version A.

- Analyze and Decide: Based on the data, decide whether to implement the winning version or iterate further. If neither version performs significantly better, you may need to test a different element or hypothesis. Example: With a clear winner (Version B), you update your homepage with the better-performing headline.

- Implement and Iterate: Once you’ve found a successful variation, roll it out to all users. However, don’t stop there: A/B testing is an ongoing process. Continuously test new ideas to keep improving.
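To make the "Split Your Audience" step more concrete, here is a minimal Python sketch of one common way to divide traffic: hashing a user ID so that each visitor consistently sees the same version. This is an illustration only, not how VWO, Optimizely, or any other tool mentioned here works internally. The function name `assign_variant`, the experiment name, and the user IDs are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Return the variant for a user, stable across visits.

    Hashing the experiment name together with the user ID means the same
    user always lands in the same group, and different experiments are
    split independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical usage: split 10,000 made-up user IDs for the headline test.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-headline")] += 1
print(counts)  # the two counts should be close to 5,000 each
```

Hashing instead of flipping a coin on every page load keeps the experience consistent: a returning visitor will not see the headline change between visits, which would otherwise muddy the results.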

When Should You Run A/B Tests?

A/B testing is a versatile tool, but knowing the right time to run a test is just as important as running it. From fine-tuning live features to validating prototypes, here’s when you should consider A/B testing.

- Post-Launch Tweaks: After launching a website, app, or feature, use A/B testing to improve performance. For example, test variations to reduce bounce rates, increase engagement, or optimize a checkout process.

- Pre-Launch Decisions: Test during the prototype or MVP stage to identify which design, feature, or messaging resonates most with your users. This ensures you start strong with data-backed decisions.

- After Identifying Problems: If analytics reveal pain points—like high drop-offs in a sales funnel or users abandoning forms—A/B testing can pinpoint solutions. For example, test shorter forms, different CTAs, or simplified navigation.

- Seasonal Campaigns or Updates: Rolling out holiday promotions or time-sensitive updates? Use A/B tests to find out which design or messaging drives higher engagement or conversions. For instance, test holiday-themed banners or limited-time offer designs.

- For Regular Optimization: A/B testing doesn’t have to be tied to major changes. Use it regularly to refine and improve your UX strategy incrementally, ensuring your product evolves with user preferences.

When Not to Test

While A/B testing is a powerful tool for decision-making, there are specific situations where running a test may not be effective or advisable. Here are the key scenarios to avoid.

- Insufficient Traffic: A/B testing needs a sample large enough to reach statistical significance. If your website or app doesn’t have enough traffic, it could take an impractically long time to gather reliable data, and results from a small sample may not be trustworthy.

- During Unusual Traffic Patterns: Running tests during major holidays, promotions, or any event that causes unusual user behavior can skew results, unless the test is specifically about that period (for example, a holiday campaign). These anomalies may not represent the typical actions of your audience and could lead to misleading conclusions.

- Lack of a Clear Goal or Hypothesis: Testing without a specific objective or hypothesis leads to inconclusive results. Always define what you’re testing (e.g., button color, headline, layout) and why before starting an A/B test.

A/B testing is most effective when it’s purposeful and timed well. By aligning tests with specific goals, you can gain actionable insights and continually enhance your product or campaign performance.
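How much traffic is "enough"? A rough way to check before you start is to estimate the sample size each version needs. The sketch below uses a standard normal-approximation formula for comparing two conversion rates. The 5% significance level and 80% power defaults are common conventions, not values from this guide, and the baseline rate, lift, and daily traffic in the usage example are made up.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a relative lift.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    relative_lift: smallest improvement worth detecting, e.g. 0.15 for +15%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


def days_to_run(n_per_variant, daily_visitors, n_variants=2):
    """Days needed if all daily visitors are split evenly across variants."""
    return math.ceil(n_per_variant * n_variants / daily_visitors)


# Hypothetical numbers: 5% baseline conversion, hoping for a 15% relative lift,
# on a page that gets 1,000 visitors a day.
n = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.15)
print(n, "visitors per variant,", days_to_run(n, daily_visitors=1000), "days")
```

With these made-up inputs the estimate comes out at roughly 14,000 visitors per version, which is why low-traffic pages can take a long time to produce a trustworthy result. Smaller expected lifts push the requirement up quickly.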

Join countless professionals in simplifying your user research process and delivering results that matter. Experience the power of UXArmy.

What to Include in A/B Testing Scenarios?

An effective A/B test is built on a well-structured plan. Here are the essential elements to include in your A/B testing scenarios to ensure accurate, actionable insights.

Clear Hypothesis

- State exactly what you’re testing and what you expect to happen.

- Example: “We believe changing the ‘Sign Up’ button color from red to blue will increase clicks by 15%.”

- Why it matters: A hypothesis keeps your test focused and ensures you’re measuring meaningful changes.

Defined Metrics

- Decide how you’ll measure success. Choose KPIs like click-through rates, sign-ups, or time spent on a page.

- Example: For a homepage test, your goal might be to reduce the bounce rate or increase the number of clicks on a product category.

- Why it matters: Clear metrics allow you to understand whether the test met your goal.

Target Audience Segments

- Ensure the test reflects the behavior of your actual users.

- Example: If testing a new navigation feature for mobile users, target only users accessing your site from mobile devices.

- Why it matters: Testing with the right audience ensures the results are relevant and actionable.

One Variable at a Time

- Focus on one change to pinpoint its exact impact, such as button text, headline, or image placement.

- Example: Test changing “Learn More” to “Discover Your Options” on a landing page CTA.

- Why it matters: Testing multiple elements at once can create confusion and make it hard to identify what worked.

Control and Variation

- Set a baseline (Control) to compare against the change (Variation).

- Example: Control: Original homepage layout. Variation: A version with a larger product image and a revised headline.

- Why it matters: A Control provides a benchmark to measure the effectiveness of your changes.

Testing Tools and Platforms

- Use tools like UXArmy to set up your test and track results.

- Example: These tools can split traffic between the Control and Variation and analyze the performance of each version.

- Why it matters: Testing platforms automate traffic splits, data collection, and result analysis, saving time and effort.
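One lightweight way to keep these elements together is to write the test plan down as a small structured record before you launch. The Python sketch below is a hypothetical illustration; the class name `ABTestPlan`, its fields, and its checks are assumptions made for this example, not part of any tool mentioned above. The "all visitors" segment is also assumed, since the hypothesis example does not name one.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ABTestPlan:
    hypothesis: str
    primary_metric: str
    audience_segment: str
    variable_under_test: str  # the one thing that differs between versions
    control: str
    variation: str
    expected_relative_lift: float  # 0.15 means "+15%"

    def problems(self) -> List[str]:
        """Return a list of gaps in the plan; an empty list means it looks complete."""
        issues = []
        text_fields = (
            "hypothesis", "primary_metric", "audience_segment",
            "variable_under_test", "control", "variation",
        )
        for name in text_fields:
            if not getattr(self, name).strip():
                issues.append(f"{name} is empty")
        if self.control.strip() == self.variation.strip():
            issues.append("control and variation are identical")
        if self.expected_relative_lift <= 0:
            issues.append("expected_relative_lift must be positive")
        return issues


# The button-color hypothesis from the section above, written as a plan.
plan = ABTestPlan(
    hypothesis="Changing the 'Sign Up' button color from red to blue will increase clicks by 15%.",
    primary_metric="click-through rate",
    audience_segment="all visitors",  # assumed for illustration
    variable_under_test="button color",
    control="red",
    variation="blue",
    expected_relative_lift=0.15,
)
print(plan.problems())
```

Writing the plan down this way makes it harder to launch a test with a missing metric or an undefined audience.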

Practical Scenarios for A/B Testing

A/B testing is versatile and can be applied to a variety of real-world use cases. Here’s an in-depth look at three common scenarios:

1. Testing Two Homepage Designs Before Launch

Objective:

Improve user engagement before launching a new product by ensuring the homepage design resonates with your target audience.

Setup:

- Control Version: A simple, static homepage featuring a single banner image with a generic call-to-action (e.g., “Learn More”).

- Variation Version: A dynamic homepage showcasing a carousel of sliding banners, each highlighting different product features or benefits.

Metrics to Track:

- Bounce Rate: Measure how many users leave the site without interacting.

- Time Spent on Page: Longer engagement often indicates user interest.

- Click-Through Rate (CTR): Track how many users click on the featured content.

Example:

A fashion brand preparing for a new collection launch could compare a static banner highlighting “Fall Collection 2024” to a dynamic carousel showcasing specific items like jackets, boots, and accessories.

Pro Tip: If you’re just getting started with A/B testing, check out our Visual Design Preference Testing template—it’s a quick and easy way to gather actionable feedback on your website or app designs!

2. Comparing Email Subject Lines to Increase Open Rates

Objective:

Encourage more users to open promotional emails, leading to increased engagement with your content or offers.

Setup:

- Control Version: Subject line: “Shop Our Biggest Sale Today!”

- Variation Version: Subject line: “Exclusive Deals Just for You—Today Only!”

Metrics to Track:

- Open Rate: The percentage of recipients who open the email.

- Click-Through Rate (CTR): How many users clicked on links within the email.

- Conversions: The number of users who completed a desired action, such as making a purchase.

Example:

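An e-commerce platform could use this test during a mid-year sale to determine whether a personalized, exclusive framing (“Exclusive Deals Just for You”) performs better than a generic sale announcement (“Shop Our Biggest Sale Today!”). Note that both subject lines reference “today,” so this comparison mainly isolates the personalized framing rather than urgency.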

3. Adjusting Mobile Navigation Menus

Objective:

Enhance mobile browsing by simplifying navigation, making it easier for users to find what they need.

Setup:

- Control Version: A traditional hamburger menu with text links to all site sections.

- Variation Version: A sticky bottom navigation bar featuring icons with labels for frequently used pages, such as “Home,” “Shop,” “Cart,” and “Profile.”

Metrics to Track:

- Page Views: Assess if users visit more pages with the new navigation.

- Navigation Drop-Offs: Determine how often users abandon the menu.

- Session Duration: See if users spend more time browsing with the improved navigation.

Example:

A food delivery app could test a new sticky navigation bar highlighting core actions like “Search Restaurants” and “Track Order” to simplify the user journey during peak dinner hours.
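The metrics named in these scenarios are straightforward to compute once you have per-session data for each version. The following Python sketch assumes a made-up record format (`variant`, `pages_viewed`, `clicked_cta`, `duration_seconds`) and treats a bounce as a single-page session with no click. Your analytics tool may define and export these differently.

```python
from collections import defaultdict


def summarize_sessions(sessions):
    """Compute bounce rate, click-through rate, and average duration per variant."""
    totals = defaultdict(
        lambda: {"sessions": 0, "bounces": 0, "clicks": 0, "duration": 0.0}
    )
    for s in sessions:
        t = totals[s["variant"]]
        t["sessions"] += 1
        # Assumed definition: a bounce is one page viewed and no CTA click.
        if s["pages_viewed"] <= 1 and not s["clicked_cta"]:
            t["bounces"] += 1
        if s["clicked_cta"]:
            t["clicks"] += 1
        t["duration"] += s["duration_seconds"]

    summary = {}
    for variant, t in totals.items():
        n = t["sessions"]
        summary[variant] = {
            "bounce_rate": t["bounces"] / n,
            "ctr": t["clicks"] / n,
            "avg_duration_seconds": t["duration"] / n,
        }
    return summary


# Tiny made-up data set, only to show the shape of the input and output.
sample_sessions = [
    {"variant": "A", "pages_viewed": 1, "clicked_cta": False, "duration_seconds": 10},
    {"variant": "A", "pages_viewed": 3, "clicked_cta": True, "duration_seconds": 120},
    {"variant": "B", "pages_viewed": 2, "clicked_cta": True, "duration_seconds": 60},
    {"variant": "B", "pages_viewed": 4, "clicked_cta": True, "duration_seconds": 100},
]
summary = summarize_sessions(sample_sessions)
print(summary)
```

Four sessions are far too few to draw any conclusion; the point is only to show how the raw data maps to the metrics you compare.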

Tips for Effective A/B Testing

Mastering A/B testing requires strategy and precision. These tips will help you design experiments that deliver clear insights and impactful results.

- Focus on High-Traffic Pages: Prioritize pages like your homepage, pricing page, or product pages to get faster, more impactful results.

- Run Tests Long Enough: Allow the test to run for at least 1-2 weeks or until you gather enough data for statistically significant results.

- Avoid Testing During Anomalous Periods: Unusual traffic patterns during holidays, flash sales, or major events can distort your results unless you’re testing for those specific situations.
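What does "statistically significant" look like in practice? One common check for conversion-style metrics is a two-proportion z-test. The Python sketch below is a simplified illustration using only the standard library; testing platforms typically run their own (often more sophisticated) analysis for you. The visitor and conversion counts are made up.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (A) and variation (B).

    Returns (z, p_value, relative_lift) where p_value is two-sided and
    relative_lift is B's improvement over A (0.2 means +20%).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test: no variation in the outcomes")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    relative_lift = (p_b - p_a) / p_a
    return z, p_value, relative_lift


# Made-up result: 5,000 visitors per version, 500 vs. 600 conversions.
z, p_value, lift = two_proportion_z_test(500, 5000, 600, 5000)
print(f"lift={lift:.0%}, z={z:.2f}, p={p_value:.4f}")
```

A p-value below a threshold you chose in advance (0.05 is a common convention) suggests the difference is unlikely to be random noise. Checking the result repeatedly and stopping the moment it dips below the threshold inflates false positives, which is another reason to decide on the test duration up front.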

Common Use Cases for A/B Testing

A/B testing is a powerful tool for optimizing your website, app, or marketing strategy. Let’s break down some of the most practical scenarios where it can deliver real impact:

1. Fine-Tuning Website Design

Testing your website’s structure, layout, or call-to-action buttons can have a big effect on user engagement.

- Example: Imagine a landing page for a fitness program. You could test two versions of the CTA button: one that says “Get Fit Now!” and another with “Start Your Free Trial.” The one that clicks with users better stays.

- Why it matters: Even small design tweaks like testing navigation menus or banner placement can improve the overall user journey.

2. Boosting E-Commerce Sales

Online stores can experiment with everything from product page layouts to checkout processes.

- Example: Test whether showing product images in a grid layout versus a list layout makes browsing easier. Similarly, test whether a single-page checkout leads to more conversions compared to multi-step checkout.

- Insight: E-commerce thrives on convenience, and A/B testing can help you design smoother shopping experiences.

3. Email Marketing Adjustments

A/B testing is essential for optimizing email campaigns.

- Example: Suppose you’re sending a promotional email for a new product. One version has the subject line “Don’t Miss Out on Our Latest Launch!” while the other says “Introducing [Product Name]: Limited-Time Offer.” Test both to see which drives more opens and clicks.

- Outcome: With clear results, you’ll know which tone or format resonates most with your audience.

4. Improving App UI/UX

For apps, A/B testing focuses on the user experience from onboarding to in-app actions.

- Example: A food delivery app could test two home screen layouts: one featuring popular restaurants and another emphasizing user-specific recommendations.

- Key takeaway: Tests like these help you understand how design impacts engagement and retention.

5. Pricing Experiments

Pricing and promotions can make or break conversions, and testing these strategies ensures you make informed decisions.

- Example: Test whether a limited-time discount (e.g., 20% off for 24 hours) works better than a bundled offer (e.g., “Buy One, Get One 50% Off”).

- Why it works: You’ll learn what pricing model appeals most to your audience.

6. Running Seasonal Campaigns

For time-sensitive promotions, testing placement, messaging, or visuals can maximize success.

- Example: Test two homepage banners for a holiday sale: one that uses urgency-focused language (“Ends Tonight!”) versus one that highlights discounts (“Save Up to 50%!”).

- Advantage: With the results, you can tailor future campaigns for greater impact.

A/B testing helps you uncover what resonates with your users and what doesn’t—whether it’s for boosting sales, increasing clicks, or refining user experiences. With the right scenarios and smart experimentation, you can drive measurable improvements and make confident decisions.

Top A/B Testing Tools to Try Today

A/B testing is essential for making data-driven decisions that help improve user experience and optimize digital performance. Below are some of the best tools for running A/B tests, with a focus on their A/B testing features and how they can help improve your website or app’s performance.

1. UXArmy

UXArmy is a user experience platform that combines A/B testing with usability insights, making it ideal for businesses that want to optimize websites, apps, or prototypes.

Testing Features:

- Easy-to-set-up A/B tests for designs, alongside usability tests.

- Provides both quantitative (click-through rates, conversion rates) and qualitative (user feedback, session recordings) insights.

- Offers tests on live websites, mobile apps, or design prototypes.

- In-depth demographic targeting to ensure test results are relevant to specific user groups.

Best for:

Teams looking for a comprehensive platform that combines A/B testing with user feedback and behavior insights.

2. Optimizely

Optimizely is one of the most powerful A/B testing platforms, catering to businesses that need advanced experimentation and optimization at scale.

Testing Features:

- Full-stack experimentation allows A/B testing on websites, mobile apps, and backend systems.

- Multivariate testing to compare multiple variations at once.

- Advanced targeting and segmentation for precise user testing.

- AI-driven insights to identify the best-performing variations automatically.

- Detailed real-time reports for immediate actionable insights.

Best for:

Large organizations needing a robust, scalable solution for complex A/B testing and personalization across multiple channels.

3. VWO (Visual Website Optimizer)

VWO is an easy-to-use A/B testing platform with a range of testing options and built-in analytics, ideal for marketers and designers who need a simple way to optimize their website or app.

Testing Features:

- A drag-and-drop visual editor for quick A/B test creation, no coding required.

- Multivariate and split URL testing capabilities.

- Advanced segmentation to target specific audiences or user groups.

- In-depth reports with heatmaps and session recordings for additional insights.

- Statistical analysis to determine the success of variations based on data.

Best for:

Marketers or small to medium-sized businesses looking for an intuitive platform to optimize user experience with A/B tests and behavior analysis.

4. AB Tasty

AB Tasty is a versatile A/B testing and personalization platform that makes it easy to experiment with digital experiences and enhance the customer journey.

Testing Features:

- Simple drag-and-drop editor for A/B test creation.

- Multivariate testing and real-time results monitoring.

- Advanced audience segmentation and targeting.

- Predictive analytics to identify winning variations faster.

- Integration with analytics tools and CRM systems for comprehensive data insights.

Best for:

Businesses of all sizes that want an easy-to-use A/B testing tool with strong segmentation and predictive analytics features.

5. Crazy Egg

Crazy Egg is a user-friendly optimization tool that combines heatmaps and A/B testing to provide deeper insights into user behavior and site performance.

Testing Features:

- Basic A/B testing features to test website variations.

- Heatmaps and scroll maps to understand user behavior visually.

- User recordings and session analysis to identify pain points.

- A simple visual editor to create variations without needing coding skills.

- Easy integration with CMS platforms like WordPress and Shopify.

Best for:

Small businesses and website owners looking for a low-cost, visual approach to A/B testing and improving user engagement.

6. Adobe Target

Adobe Target is a powerful A/B testing and personalization tool designed for enterprises looking for AI-driven insights and multi-channel experimentation.

Testing Features:

- Advanced A/B, multivariate, and split testing across websites, mobile apps, and email.

- Personalization features driven by AI to provide custom experiences for users.

- Real-time testing with performance tracking and automated insights.

- Seamless integration with other Adobe products like Adobe Analytics for deeper reporting.

- Targeting and segmentation capabilities for highly customized tests.

Best for:

Large enterprises with complex needs for multi-channel testing and personalization, especially those already using other Adobe products.

Examples of A/B Testing Done Right

Small changes can lead to significant results when done right. Here are proven examples of A/B testing that showcase how smart tweaks can improve user experience and drive success.

Booking.com – A/B Testing in the Tourism Sector

- Pioneering A/B Testing: Booking.com is a leader in A/B testing within the travel sector, consistently outperforming its competitors in eCommerce.

- Scale and Frequency: The company runs nearly a thousand A/B tests simultaneously, showcasing the scale at which they operate.

- Focus on Copy Testing: Booking.com excels in testing website copy, which plays a significant role in refining user engagement and driving revenue.

- Flywheel Effect: A/B testing is seen as a critical driver of revenue growth at Booking.com, continuously optimizing their website and services.

Amazon – Heavy on A/B Testing

- Customer Experience Optimization: Amazon uses A/B testing to improve the customer experience on its marketplace, constantly fine-tuning its interface.

- Example – Product Detail Page: In 2020, Amazon tested a new design for product detail pages to make user feedback more visible.

- Alexa and Voice Ads: Amazon used A/B testing for Alexa’s voice assistant, experimenting with ideal invitation lengths to drive subscriptions for paid voice ads.

- Continuous Improvement: A/B testing is a key tool for Amazon in adapting and refining its services to meet user expectations.

These examples show how both Booking.com and Amazon leverage A/B testing to optimize their platforms for better user experiences and business outcomes.

Optimize Your UX with A/B Testing Using UXArmy

Are you looking to make data-driven decisions to improve your website or app’s user experience? UXArmy offers an intuitive platform to easily run A/B tests and gather actionable insights from real users. By testing different versions of your designs, you can make informed decisions that enhance engagement, reduce bounce rates, and boost conversions.

Ready to Start Testing?

Take the first step in refining your user experience with UXArmy. Sign up today and start testing your designs with real users!

Sign up for UXArmy now.


Frequently asked questions

What is A/B testing in UX design?

A/B testing in UX compares two versions of a design element (like a CTA button or layout) to see which performs better with users. It helps improve usability, boost engagement, and increase conversions based on real data instead of assumptions.

How does A/B testing differ from usability testing?

A/B testing measures performance between two versions (quantitative), while usability testing focuses on observing user behavior and feedback (qualitative). Many teams combine both using usability testing software or platforms like UXArmy.

What are the best A/B testing tools in 2025?

Some of the best A/B testing platforms include UXArmy (A/B + usability testing in one), Optimizely, VWO, AB Tasty, and Crazy Egg. Each offers features like split testing, heatmaps, and analytics for better UX decisions.

Can A/B testing be done on prototypes?

Yes! Tools like UXArmy, Maze, and Optimal Workshop allow you to A/B test prototypes using methods like card sorting, tree testing, or preference testing before full development.

Is A/B testing part of generative or evaluative research?

A/B testing is primarily evaluative research—it helps validate which design works better. Generative research, on the other hand, uncovers user needs and pain points before design.

How does tree testing compare to A/B testing?

Tree testing checks navigation structure and information architecture, while A/B testing compares design variations. Many UX research platforms (like UXArmy) support both tree testing and A/B testing.

Are there free A/B testing and usability tools?

Yes. UXArmy’s free plan (2 responses), Maze’s free tier, and some card sorting tools (like XSort or the free version of OptimalSort) offer limited free testing. Google Optimize, once a popular free option for basic A/B tests, was discontinued by Google in September 2023.

What metrics should I track in A/B tests?

Common A/B test metrics include click-through rate (CTR), conversion rate, time on page, bounce rate, and task completion rates. These align with broader usability analysis tools and UX survey insights.

How does A/B testing fit into a UX research process?

A/B testing is often used after initial research (personas, surveys, user interviews) to validate specific design decisions. It complements methods like card sorting, tree testing, and usability surveys.