The UX research field is constantly evolving, driven by advances in technology, changing user behaviors, and the growing demand for rapid product iteration. One of the most recent and controversial innovations is the use of synthetic participants: AI-generated personas designed to simulate real users in usability testing scenarios. But are these avatars a revolutionary solution for modern research or just another overhyped trend?

As product teams and researchers search for efficient user testing platforms, usability testing tools, and UX research software, synthetic participants present a new, intriguing proposition. In this post, we’ll explore what synthetic participants really are, their strengths and weaknesses, how they compare to real users, and whether they represent a meaningful shift in the future of remote usability testing.

What Are Synthetic Participants?

Synthetic participants are artificially generated user personas created using AI models trained on large datasets of user behavior, demographics, psychographics, and interaction patterns. These virtual users can be deployed to test digital products – ranging from websites to mobile apps – without needing to recruit human participants.

Synthetic participants are designed to simulate typical user behaviors, decision-making processes, and even emotional reactions. Unlike traditional personas, which are static and manually created, synthetic participants are dynamic and interactive. Some are capable of navigating prototypes, giving feedback, or making purchasing decisions, all without a human behind the screen.

These synthetic personas are built by aggregating anonymized behavioral data and training machine learning algorithms to respond as a typical user might under certain conditions. Companies are beginning to include them as part of broader UX research tools to accelerate testing and reduce costs.

One notable company pioneering this space is Synthetic Users, which provides a platform specifically built to simulate user interaction scenarios using synthetic personas. Their technology allows researchers to deploy tests rapidly and gain predictive behavioral feedback – without live participants.

How Synthetic Participants Work

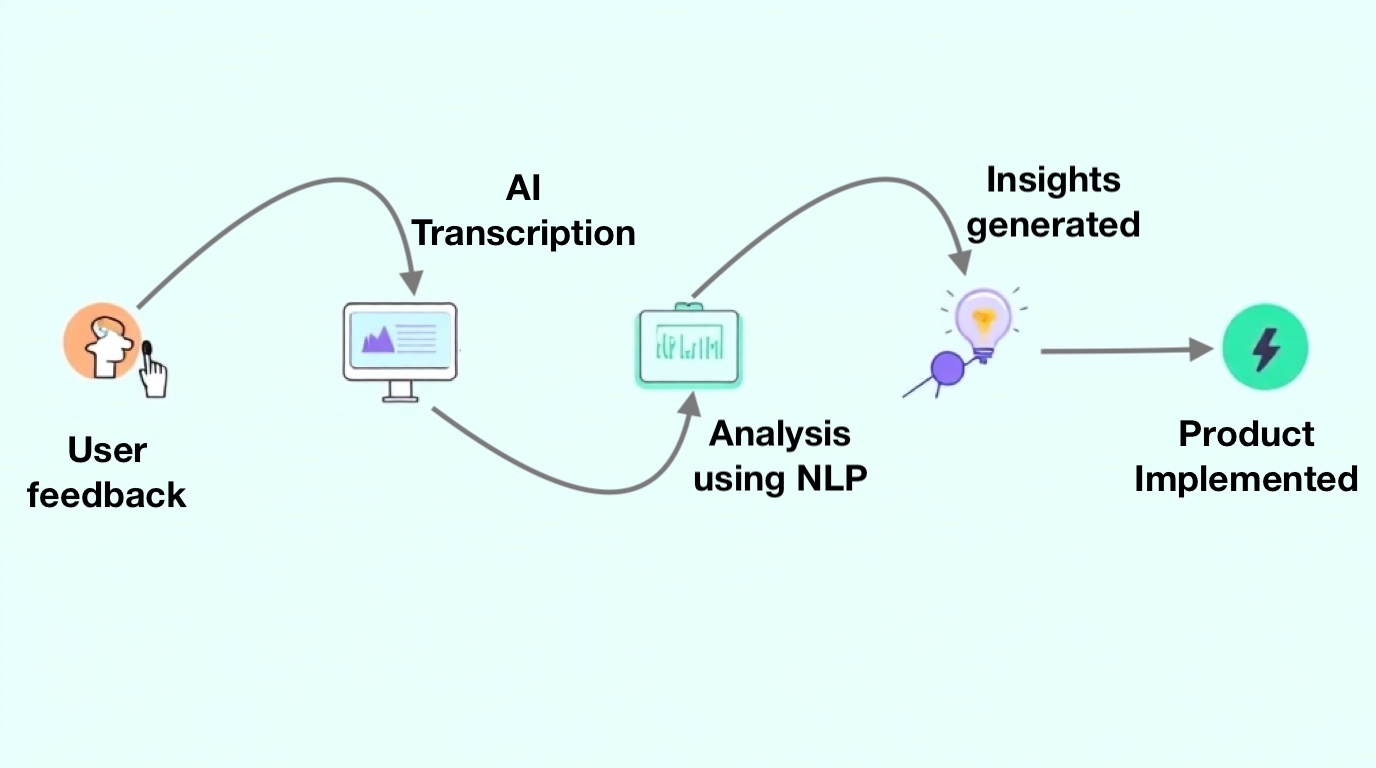

Synthetic participants operate on predictive modeling and behavioral simulation. Most systems use data from real user sessions – clickstreams, heatmaps, session recordings, and feedback – to train models that emulate user behavior.

These models are then applied to specific testing scenarios, such as navigating a checkout flow, completing a task in a SaaS dashboard, or interacting with a new mobile UI. The synthetic participant makes decisions and performs actions based on its simulated persona’s goals, motivations, and preferences.

In more advanced implementations, Natural Language Processing (NLP) enables these participants to provide open-text feedback that mimics human sentiment. This is especially useful for teams using remote user testing tools who want quick, iterative feedback loops without waiting for human participants.

Some platforms offer tunable variables for researchers to simulate edge cases, power users, or users with accessibility needs – allowing a broader range of testing without additional recruitment.
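To make the idea of a persona-driven simulation concrete, here is a minimal Python sketch. It is not how any particular platform works: the `Persona` fields, the `CHECKOUT_FLOW` steps, and the abandonment rule are all assumptions chosen for illustration, and a real system would derive its behavior from trained models rather than a single error probability.

```python
import random
from dataclasses import dataclass


@dataclass
class Persona:
    """Hypothetical tunable persona; field names are illustrative only."""
    name: str
    patience: int      # missteps tolerated before the persona abandons the task
    error_rate: float  # assumed probability of a misstep on each attempt


# Assumed flow for the example; a real test would use the product's own steps.
CHECKOUT_FLOW = ["cart", "shipping", "payment", "review", "confirm"]


def simulate_session(persona, flow, rng):
    """Walk the flow step by step and return the outcome plus an action log."""
    log = []
    errors = 0
    for step in flow:
        # Retry the current step until it succeeds or patience runs out.
        while rng.random() < persona.error_rate:
            errors += 1
            log.append((step, "error"))
            if errors > persona.patience:
                return {"completed": False, "errors": errors, "log": log}
        log.append((step, "ok"))
    return {"completed": True, "errors": errors, "log": log}


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are repeatable
    personas = [
        Persona("novice", patience=2, error_rate=0.3),
        Persona("power_user", patience=5, error_rate=0.05),
    ]
    for persona in personas:
        result = simulate_session(persona, CHECKOUT_FLOW, rng)
        print(persona.name, result["completed"], result["errors"])
```

Adjusting `patience` and `error_rate` is a stand-in for the tunable variables described above; simulating an edge case amounts to choosing extreme values for them.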

Benefits of Using Synthetic Participants

Speed and Availability

Synthetic participants are always available and can be deployed instantly, making them ideal for rapid testing cycles. This is especially valuable when you’re on tight deadlines or iterating frequently.

Cost Efficiency

Traditional user research can be expensive due to recruitment, compensation, and scheduling logistics. Synthetic participants remove most of these overheads, offering a more affordable option for teams with limited budgets.

Scalable Testing

You can run hundreds of simulated tests simultaneously, enabling teams to evaluate numerous scenarios and variations in less time. This scalability is hard to match with real users, especially in global or enterprise-level testing.

Scenario Control

You can fine-tune synthetic personas to mimic specific demographic, behavioral, or accessibility profiles. This level of control can help validate edge cases and test inclusivity at scale.

Data-Rich Feedback

AI-generated participants can generate rich telemetry, such as time-on-task, error rates, click paths, and simulated frustrations, without fatigue or scheduling constraints. They are not free of bias, though: a model trained on skewed data will reproduce that skew. Combined with usability testing platforms, this data becomes actionable quickly.
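As a rough illustration of how such telemetry turns into metrics, the Python sketch below summarizes a handful of session records. The record fields (`task_seconds`, `errors`, `completed`) and the values are made up for the example and do not reflect any platform's export format.

```python
from statistics import mean

# Hypothetical session records; fields and values are invented for illustration.
sessions = [
    {"task_seconds": 42.0, "errors": 0, "completed": True},
    {"task_seconds": 75.5, "errors": 2, "completed": True},
    {"task_seconds": 120.0, "errors": 4, "completed": False},
    {"task_seconds": 58.5, "errors": 1, "completed": True},
]


def summarize(sessions):
    """Compute completion rate, mean time-on-task, and errors per session."""
    if not sessions:
        raise ValueError("at least one session is required")
    completed = [s for s in sessions if s["completed"]]
    return {
        "completion_rate": len(completed) / len(sessions),
        # Time-on-task is averaged over completed sessions only.
        "mean_time_on_task": (
            mean(s["task_seconds"] for s in completed) if completed else None
        ),
        "errors_per_session": mean(s["errors"] for s in sessions),
    }


if __name__ == "__main__":
    print(summarize(sessions))
```

Averaging time-on-task over completed sessions only is one common convention; including abandoned sessions would answer a different question, so the choice should be stated in any report.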

“Synthetic users offer a bridge between analytics and empathy – but you need to know how to interpret them.” – Sarah Doody, UX Strategist

Drawbacks and Limitations

Lack of True Human Emotion

While AI can simulate behavior, it can’t replicate authentic emotional responses. Nuances like confusion, delight, or frustration often require human interpretation and empathy to understand fully.

No Contextual Awareness

Synthetic participants lack context. They don’t bring lived experience, cultural nuance, or unexpected insights that real users provide. They act as models – not mirrors – of reality.

Risk of Misleading Data

If not properly validated, synthetic feedback can give a false sense of accuracy. Decisions based solely on these models risk overlooking key usability pain points or misrepresenting real-world diversity.
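One simple form of validation is to compare a synthetic metric with the same metric from a small human study and flag the tasks where they disagree. The Python sketch below assumes per-task completion rates are available for both groups; the task names, the rates, and the 10-point tolerance are invented for illustration.

```python
def flag_divergent_tasks(synthetic, human, tolerance=0.10):
    """Return tasks whose synthetic and human completion rates differ by more
    than `tolerance`, mapped to the signed gap (synthetic minus human)."""
    flagged = {}
    # Only tasks measured in both groups can be compared.
    for task in sorted(synthetic.keys() & human.keys()):
        gap = synthetic[task] - human[task]
        if abs(gap) > tolerance:
            flagged[task] = round(gap, 2)
    return flagged


if __name__ == "__main__":
    # Hypothetical completion rates, not real study results.
    synthetic_rates = {"signup": 0.92, "checkout": 0.88, "search": 0.95}
    human_rates = {"signup": 0.90, "checkout": 0.61, "search": 0.93}
    print(flag_divergent_tasks(synthetic_rates, human_rates))
    # prints {'checkout': 0.27}
```

A positive gap means the synthetic participants found the task easier than real users did, which is the case most likely to hide a usability problem.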

Limited Use in Exploratory Research

Synthetic participants are ideal for task-based, performance-driven testing – not for open-ended interviews or generative research, where exploratory dialogue is crucial.

Ethical and Trust Concerns

Clients and stakeholders may be skeptical of research that excludes real users. Clear communication and methodological transparency are essential when using synthetic data.

Ideal Use Cases for Synthetic Participants

- A/B Testing at Scale: Evaluate different design versions without multiple rounds of participant recruitment.

- Benchmarking Usability: Standardized tasks (e.g., form filling, onboarding flows) can be tested repeatedly to gauge changes over time.

- Prototype Stress Testing: Simulate edge cases and error conditions with aggressive user paths.

- Accessibility Simulations: Test how users with disabilities might experience your interface based on behavioral models.

- Early-stage Concept Validation: Get directional feedback before investing in high-fidelity designs or human recruitment.

Synthetic vs. Real Users

| Aspect | Synthetic Participants | Real Users |
| --- | --- | --- |
| Availability | Instant, 24/7 | Scheduled |
| Cost | Low | High |
| Emotional Insight | Simulated | Genuine |
| Contextual Understanding | Limited | Deep |
| Exploratory Feedback | Weak | Strong |
| Speed | Fast | Moderate to Slow |
| Diversity Representation | Controlled (synthetic) | Authentic (organic) |

Synthetic participants don’t replace real users – they complement them. Use them to accelerate learning but validate key decisions with human insights.

Real-World Providers

SyntheticUsers.com

SyntheticUsers.com is among the first platforms purpose-built for UX teams looking to use synthetic participants in product testing. Their technology enables simulation-based testing that emulates how users interact with interfaces, bringing efficiency and predictive accuracy into design workflows.

UXArmy

At UXArmy, we’re actively exploring how synthetic participants can be used to complement traditional remote usability testing. For structured testing scenarios, synthetic users can reduce turnaround time and provide a foundational layer of insight before human validation.

Frequently asked questions

What are synthetic participants in UX research?

Synthetic participants are AI-generated personas or agents used to simulate human behavior, responses, or feedback in user experience (UX) research. They are trained on real user data and behavioral models to mimic realistic interactions without requiring actual human subjects.

How are synthetic participants created?

Synthetic participants are created using large language models (LLMs), behavioral datasets, and UX domain-specific prompts. Some systems also use data from prior usability tests or user interviews to generate realistic user behavior patterns and responses.

What are the benefits of using synthetic participants?

The key benefits include:

1. Rapid scalability of testing sessions

2. Early-stage design feedback without recruitment delays

3. Cost-effective simulation of edge cases and personas

4. Safe testing of sensitive or high-risk scenarios

Are synthetic participants a replacement for real users?

No. Synthetic participants are best used as a complement to real users, especially in early-stage design or when testing feasibility. They help validate interface logic, navigation, and general usability but lack lived experience and emotional nuance.

What are the limitations of synthetic participants?

Synthetic agents can:

1. Lack authentic emotional feedback

2. Miss unpredictable edge-case behavior

3. Reflect bias if trained on skewed data

4. Create false confidence in unvalidated assumptions

Can synthetic participants be used in usability testing?

Yes, synthetic participants can be used to run early, unmoderated usability tests, especially for interface flows or content validation. However, insights must be verified with real user testing to ensure reliability.

Is it ethical to use synthetic participants in UX research?

Yes, when transparently communicated and used appropriately. They should not be presented as substitutes for real user voices. Ethical use involves disclosing their use in research reporting and understanding their limitations.