Qualitative Research in the Age of AI

Faster isn’t automatically wiser!

Quant tells you what happened; qualitative user research tells you why. That distinction has always mattered, but it matters even more now that many products include AI that drafts emails, suggests routes, prices rides, or answers customer queries. People need to understand and trust those systems, and both understanding and trust are deeply human phenomena. If your team ships an AI-powered flow that feels opaque or out-of-control, your dashboards will light up later with support tickets and silent churn. Qual is how you catch that before it happens.

AI, of course, has changed how we do qual. You can transcribe in minutes, pull up multilingual summaries, cluster dozens of interviews overnight, and generate decent first-pass briefs tailored to different audiences. That’s real leverage. But it’s not a license to hand judgment to a model. Reputable guidance keeps repeating the same drumbeat: lean on AI for prep and first-pass analysis; be careful when AI comes between you and participants or when it tries to “conclude” without traceable evidence. Nielsen Norman Group’s evaluations and training materials are blunt on this point: never let AI do all your analysis, and don’t let tools substitute for the human contact that creates context and meaning.

There’s also a subtler risk: when automation gets good, we’re tempted to outsource the thinking. UXmatters calls this “cognitive offloading,” a habit that saves time but can dull the craft if you let it take over. In other words, AI can make you faster and less perceptive at the same time unless you build guardrails.

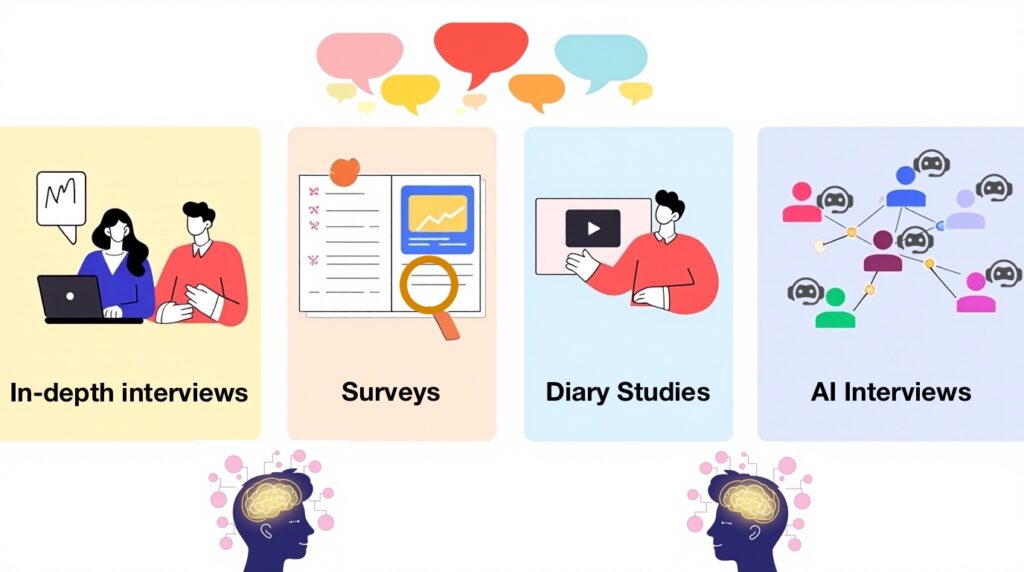

What follows is a practical guide for mid-to-senior UX researchers and designers. Each method starts with the proven, manual craft, then shows how to use AI as a co-pilot without losing rigor, ethics, or taste.

House rules for AI-assisted qualitative work

Traceability or it didn’t happen. If a theme can’t be traced to clips or verbatims, it’s not ready to steer a roadmap. Use AI to draft codes and clusters; insist on linking every claim to evidence you can replay in the room. NN/g’s guidance on “don’t rely on AI to do all your analysis” is really about preserving this chain of custody.

Privacy and policy first. Treat participant data like production code: governed, reviewed, and auditable. Before you paste anything into a model, confirm the tool is approved and your process redacts PII as required. Vendor docs (e.g., Qualtrics’ AI administration) reflect how many organizations are formalizing these controls.

Human contact isn’t optional. Teresa Torres’s reminder is worth taping above your desk: don’t use generative AI to replace discovery with real humans. It can draft guides, brainstorm probes, and summarize notes; it can’t build rapport or read the room for you.

Treat AI like a bright junior analyst. Great at speed and recall, prone to overconfidence. Use it to widen options and compress drudgery; keep humans for contact, ethics, and decisions. UXmatters’ argument about avoiding cognitive shortcuts is a good north star here.
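
The redaction step in the privacy rule can be partly automated before any text reaches a model. The sketch below is a minimal Python illustration, assuming simple regular expressions for email addresses and phone-like numbers. The function name `redact_pii` and the placeholder labels are hypothetical, and pattern matching alone will miss names, addresses, and other identifiers, so treat it as a first filter ahead of human review rather than a complete solution.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs more than regex.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


sample = "Reach me at jane.doe@example.com or +65 9123 4567 after 5pm."
print(redact_pii(sample))
```

Running redaction as a separate, reviewable step (rather than trusting a vendor setting) keeps the process auditable in the same way the rule asks of the data itself.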

In-Depth Interviews (IDIs)

The time-tested craft (manual first)

Use IDIs when you need to understand motives, trade-offs, anxieties, and habits, especially for 0→1 product bets, sense-making after a puzzling metric, or sensitive domains (health, finance, identity). Sampling should track recent behavior and context, not just titles: the last time they tried to switch accounts; the last incident where a smart feature “went rogue”; the last week they managed a task at home with kids underfoot. You’ll learn more from five carefully varied contexts than from fifteen lookalikes.

Write guides that elicit episodes. Start with, “Walk me through the last time you…” instead of “Would you…?” Pyramid from story → concrete steps → feelings and workarounds. Prepare three rival probes for each topic so you can follow the participant’s language (“You said ‘mistrust.’ What tipped you off?” vs. “Was it the copy?”).

Moderation is a performance of quiet. Use silence; it buys you honesty. Label tension when you hear it: “You hesitated. What was going through your mind?” Mind the power dynamics in the room. If a caregiver or manager is present, acknowledge the influence and decide whether to continue, split, or reschedule. Remotely, start with a tiny warm-up task (“share one notification you dismissed today and why”) to loosen the tongue and catch accessibility issues before they sink your session.

Synthesis begins with open coding on a small seed set, then naming patterns only after you’ve seen contradictions. Carry a “rival explanations” column in your notes: what else could explain this? Stop interviews when signal stabilizes, not at a magic N. Tie every claim to a clip; executives trust what they can see.

Common failure modes: leading questions, turning an interview into a demo, treating quotes like votes, and mistaking “I would” for evidence of “I did.”

Where AI adds genuine value

Before. Ask a model to draft a first-pass guide from your problem frame, plus counter-probes you might be missing and a lightweight risk register (topics to tread carefully around: consent, safety, identity). The point isn’t to accept drafts blindly; it’s to start stronger and spend more time thinking than typing.

During. Run diarization for clear speaker separation and time-stamped “moments” you can jump to later. If real-time prompts distract you from rapport, turn them off; presence beats gadgetry.

After. Let AI handle transcripts, translation, candidate clusters, and audience-specific draft summaries. Then you do the deciding: merge, split, and discard until patterns are robust and traceable to evidence. This is exactly the balance NN/g encourages: heavier AI in prep and first-pass analysis; caution whenever a tool edges toward doing the human work for you.

In IDIs, UXArmy AI Summaries and AI auto-tagging can knock hours off the first synthesis pass. Use them to surface the “bones” of your analysis quickly, then do the human refinement (challenge, merge, and add context) before anything steers a roadmap.

AI-moderated interviews

Automated interviewers can be helpful in narrow, low-risk scopes: multilingual quick pulses, late-stage comprehension checks, or concept reactions where your tone and boundaries are already calibrated. Run them the way you’d onboard a new moderator: disclose clearly that an automated system will guide the session, install safety rails (approved prompts, banned topics, escalation to a human on distress or confusion), and do calibration pilots in parallel with human-moderated sessions. Track completion, depth per answer, topical coverage, “off-policy” follow-ups, and participant satisfaction; compare these to your human baseline before scaling. NN/g’s umbrella advice is caution whenever AI stands between you and a participant.
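
The comparison against a human baseline can be made explicit with a few lines of code. The following is a rough Python sketch, not a standard method: the metric names, the 10% tolerance, the use of words per answer as a proxy for depth, and all the numbers are illustrative assumptions you would replace with your own pilot data.

```python
# Hypothetical metrics from parallel pilots; every number here is a placeholder.
human_baseline = {"completion_rate": 0.92, "words_per_answer": 48.0,
                  "topic_coverage": 0.90, "off_policy_rate": 0.02,
                  "satisfaction": 4.4}
ai_pilot = {"completion_rate": 0.95, "words_per_answer": 31.0,
            "topic_coverage": 0.88, "off_policy_rate": 0.07,
            "satisfaction": 4.1}

# Metrics where a lower value is better.
LOWER_IS_BETTER = {"off_policy_rate"}


def shortfalls(pilot, baseline, tolerance=0.10):
    """Return metrics where the pilot is worse than baseline by more than
    `tolerance` (relative), mapped to (baseline value, pilot value)."""
    flagged = {}
    for name, base in baseline.items():
        value = pilot[name]
        if name in LOWER_IS_BETTER:
            worse = value > base * (1 + tolerance)
        else:
            worse = value < base * (1 - tolerance)
        if worse:
            flagged[name] = (base, value)
    return flagged


print(shortfalls(ai_pilot, human_baseline))
```

With these placeholder numbers, the pilot completes more sessions but is flagged on answer depth and off-policy follow-ups, which is the kind of trade-off worth reviewing by hand before scaling.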

Vendor and practitioner write-ups showcase strengths and limits: always-on availability, consistent probing against a playbook, and multilingual reach, but brittle rapport and the occasional odd or leading follow-up. Take them as throughput tools, not replacements for strategic interviews, and keep a human in the loop.

“AI will never replace research with real users.” – Ellina Morits, Design Lead

“AI moderation offers scale and efficiency—but at the cost of human depth, contextual sensitivity, and nuanced interpretation.” – Craig Griffin, CIO (FuelAsia)

“Don’t let generative AI tools replace talking to real humans.” – Teresa Torres, Product discovery coach.

“This myopia is NOT something driven by a user need.” – Scott Jenson, ex-Google design leader.

“Being honest about using AI can actually make people trust you less.” – University of Arizona researchers summarizing multi-study results.

“Trust: how controllable, understandable, and transparent does it feel?” – Ammar Halabi, measuring AI experiences.

Contextual inquiry & field studies

Contextual work is where tacit knowledge lives: that checklist taped to a monitor, the policy everyone ignores, the workaround that keeps a fragile process running. Much of this never appears in an interview because people forget it’s unusual.

Manual first. Observe before you interrupt. Ask participants to narrate a routine task without performing for you. Document constraints (devices, lighting, signing in, distractions), not just steps. Co-interpret artifacts before you leave: “Walk me through this sticky note like I’m new.”

Where AI helps. On-device transcription makes noisy spaces workable; lightweight computer-vision can timestamp recurring objects or steps so you can jump back later; timeline summaries speed your write-ups. They’re drafts, not verdicts. Let AI do the filing; you do the seeing. NN/g’s advice (support existing practices, don’t supplant them) fits this method perfectly.

Diary studies & experience sampling

Products live in the seams of people’s days. Diary methods catch how trust forms (or erodes), how habits survive stress, and how context changes choices.

Manual first. Mix prompted entries with free ones; recruit by journey state (first week, retained, churn-risk); do mid-study check-ins to correct drift and encourage quieter voices; incentivize consistency, not verbosity. Ask participants to attach artifacts when possible (screenshots, photos of a space, a snippet of a message) so entries don’t float free of reality.

Where AI helps. Auto-classify entries by situation + emotion + outcome, then flag anomalies worth a call. Translate across markets so a central team can synthesize a global study in days. Use suggestions as triage; the meaning still comes from you.
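
One simple way to turn classified entries into a triage list is to look for tag combinations that rarely occur. The Python sketch below assumes each entry already carries `situation`, `emotion`, and `outcome` tags (suggested by a model and spot-checked by a researcher); the field names, the sample entries, and the rarity threshold are all hypothetical.

```python
from collections import Counter

# Hypothetical diary entries with model-suggested tags.
entries = [
    {"id": "p01-d1", "situation": "commute", "emotion": "neutral", "outcome": "completed"},
    {"id": "p02-d1", "situation": "commute", "emotion": "neutral", "outcome": "completed"},
    {"id": "p03-d2", "situation": "commute", "emotion": "neutral", "outcome": "completed"},
    {"id": "p01-d3", "situation": "at home", "emotion": "frustrated", "outcome": "abandoned"},
    {"id": "p04-d2", "situation": "at work", "emotion": "relieved", "outcome": "completed"},
]


def tag_combo(entry):
    """The (situation, emotion, outcome) triple used to group entries."""
    return (entry["situation"], entry["emotion"], entry["outcome"])


def flag_anomalies(entries, max_count=1):
    """Return ids of entries whose tag combination appears at most `max_count` times."""
    counts = Counter(tag_combo(e) for e in entries)
    return [e["id"] for e in entries if counts[tag_combo(e)] <= max_count]


print(flag_anomalies(entries))
```

Rarity is only a prompt for attention: a flagged entry may be a tagging error or the most important story in the study, and deciding which is the researcher's job.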

Moderated task-based evaluations

Completion rates are table stakes. In AI-infused flows, two other questions matter just as much: What did they expect the system would do? and Where did consent or control feel murky? Those answers determine whether people trust your product tomorrow.

Manual first. Start each session by asking what the participant thinks will happen when they press a button or accept a suggestion. During tasks, watch for hesitation, self-repairs (“oh no, not that”), and reversals. Afterward, ask them to explain, briefly, what they believe the system did and why. That one sentence is a surprisingly sharp test of comprehension.

Where AI helps. Auto-tag hesitation and repair moments across sessions; summarize recurring misinterpretations of prompts, disclaimers, or model outputs. Treat these as lead indicators of trust problems. Then you, or your writer, change the copy or the interaction and retest. NN/g’s “limitations” pieces emphasize the difference between compressing volume and reaching conclusions; keep that separation intact.

Participatory design & co-creation

Co-creation is not “users decide for us.” It’s a structured way to surface values and trade-offs: what people gladly exchange, what they’ll never trade, and where power and context shape choices.

Manual first. Design activities that elicit principles, not just pretty posters. Have participants storyboard their ideal day with and without your service; script role-plays around consent or control; run an “un-sales pitch” where they talk you out of adopting a feature. Moderate for equity: rotate speaking order, use silent sketching rounds, and close with a reflection on what felt empowering versus extractive.

Where AI helps. Generate divergent stimuli (scenarios, microcopy variants, even rough UI sketches) to provoke discussion. After workshops, use clustering to tame the mountain of sticky-note photos and transcripts, then resynthesize with the team so nuance isn’t flattened by tidy categories.

Focus groups

Focus groups are rarely the first choice for qualitative UX research, but sometimes they are useful.

Focus groups are good at mapping shared vocabulary and social norms, not at determining usability or navigating sensitive topics (where performance and peer pressure distort). If you run one, treat it as reconnaissance.

Moderate actively, curb dominance, invite quiet voices, and route contentious issues to later 1:1 interviews. Look for the words people borrow from each other; contagion is a clue to social meaning.

The UXArmy UX research platform lets you conduct focus groups with up to 10 participants and up to 3 hours of recording. Moderators and hidden observers are also supported.

Surveys for qualitative insight

Surveys are usually understood as quantitative. This section is about qual-leaning surveys that add “why” at scale: open-ended prompts after a copy test, a quick concept reaction with a short free-text field, or a comprehension check for consent language.

Target by recent behavior and journey state (“used feature X this week,” “first 7 days,” “renewal month”). Anchor prompts in specific moments (“Think about the last time you…”). Pair key open-ends with a feeling or confidence scale so you can sort. Soft-launch to prune ambiguous items and check break-offs. Build a seed codebook with a second rater; code the rest; surface contradictions and outliers, not just frequencies.
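
If you build the seed codebook with a second rater, it helps to check how often the two of you agree before coding the rest. Cohen's kappa is one common agreement statistic for this; it is an addition here, not something the guidance above prescribes. The Python sketch below assumes each rater assigns exactly one code per response, and the codes and responses are made up for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each assign one code per response."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of responses.")
    n = len(rater_a)
    # Share of responses where the two raters chose the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's code frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned to the same eight open-ended responses.
a = ["trust", "trust", "control", "clarity", "control", "trust", "clarity", "control"]
b = ["trust", "control", "control", "clarity", "control", "trust", "trust", "control"]
print(round(cohens_kappa(a, b), 2))
```

A low value is a signal to revisit code definitions together, not a verdict on either rater; the disagreements themselves are often where the codebook gets sharper.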

Adaptive AI follow-ups (powerful in the right places)

“Conversational” or adaptive surveys can ask a tailored probe after a respondent’s open-end: “You mentioned confusing. Tell me what made it confusing.” They shine when the domain is known (copy comprehension, consent language, simple concept reactions). Qualtrics documents this pattern as “conversational feedback / AI adaptive follow-up,” including administration and privacy notes; you’ll find similar patterns in other tools. Early findings from research groups exploring AI-tailored probes suggest the value is not “more words,” but clearer narratives and fewer ambiguities in what people meant.

Run them like a professional:

- Keep a bank of pre-approved probes per topic; let the model slot specifics from the prior answer rather than improvising anything it wants.

- Cap depth (1–2 follow-ups) and watch break-offs. Adaptive doesn’t mean endless.

- A/B test static vs. adaptive versions to prove that you get richer answers without wrecking completion time.

- Store the conversation tree so every conclusion is auditable: what prompt was shown, what the respondent saw next, and the final verbatim. Qualtrics’ administration docs are useful here because they spell out how the feature is governed inside large organizations.
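
Three of the practices above (a pre-approved probe bank, a depth cap, and a stored conversation trail) fit together in a small amount of logic. The Python sketch below is an illustration under stated assumptions, not how Qualtrics or any other tool implements the feature: `PROBE_BANK`, `Conversation`, and `extract_detail` are hypothetical names, and `extract_detail` is a keyword-matching stand-in for the step where a model would pick out the respondent's own wording.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical bank of pre-approved probe templates, keyed by topic.
PROBE_BANK = {
    "copy_comprehension": 'You mentioned "{detail}". Tell me what made it {detail}.',
}
MAX_FOLLOW_UPS = 2  # depth cap: at most two follow-ups per open-end


def extract_detail(answer: str) -> Optional[str]:
    """Stand-in for the model step: pick a salient word from the answer."""
    for word in ("confusing", "unclear", "misleading"):
        if word in answer.lower():
            return word
    return None


@dataclass
class Conversation:
    topic: str
    log: list = field(default_factory=list)  # audit trail: (prompt shown, verbatim answer)
    follow_ups: int = 0

    def record(self, prompt: str, answer: str) -> Optional[str]:
        """Store the exchange and return the next approved probe, or None to stop."""
        self.log.append((prompt, answer))
        detail = extract_detail(answer)
        if detail is None or self.follow_ups >= MAX_FOLLOW_UPS:
            return None
        self.follow_ups += 1
        return PROBE_BANK[self.topic].format(detail=detail)


convo = Conversation(topic="copy_comprehension")
probe = convo.record("What did you think of the consent screen?",
                     "Honestly it was confusing.")
print(probe)
print(convo.log)
```

Because the model only fills a slot in an approved template, reviewers can audit the full set of wordings a respondent could ever see, and the log preserves which one was actually shown.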

In surveys, UXArmy AI follow-up questions can personalize probes so you capture better “why” without inflating study time. Coupled with AI auto-tagging and AI Summaries for open-ends, you can move from raw text to decision-ready patterns quickly, always anchored back to verbatims for credibility.

What not to replace: foundational interviews, contextual work, and anything where nonverbal cues, environment, or rapport carry the meaning.

Synthesis that earns decisions

Clustering ≠ sense-making. Affinity walls, journey stories, and the link from insight → bet remain human work. Use AI to compress volume (draft codes, propose clusters, flag contradictions, generate rival explanations, and assemble audience-specific draft briefs), then you choose what survives. If you’ve ever watched a model produce a plausible but wrong “theme,” you know why NN/g hammers on limits: AI is stochastic and can miss, misinterpret, or manufacture insights if you don’t verify.

A trick that moves rooms: for each recommended option, pair one short clip that makes the risk visceral with one that makes the opportunity feel inevitable. Decisions happen when people can feel consequences, good and bad, without you lecturing.

Reporting & stakeholder storytelling

Stop thinking in terms of deliverables; think in terms of decision theatre. Your “report” can be a 3–4 minute reel of pivotal moments, a one-page problem frame with opportunity size, and a short memo per audience (execs, engineering, CX) that uses the same canonical evidence to answer different questions. AI can help tailor those memos; it cannot decide what to say.

UXmatters calls out a trap we all fall into: shiny tools make it easy to accept what “looks right.” Resist the cognitive shortcut. Cross-check claims against the raw. Link every theme to a clip/quote. Your credibility is the product.
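
Linking every theme to a clip or quote is easy to check mechanically once themes and evidence live in a structured form. The following Python sketch assumes a very small data model; the `Clip` and `Theme` classes, their fields, and the sample content are hypothetical and would map onto whatever your research repository actually stores.

```python
from dataclasses import dataclass, field


@dataclass
class Clip:
    session_id: str
    start_seconds: int  # where the moment begins in the recording
    quote: str


@dataclass
class Theme:
    name: str
    evidence: list = field(default_factory=list)  # list of Clip


def untraceable(themes, min_clips=1):
    """Return names of themes backed by fewer than `min_clips` clips."""
    return [t.name for t in themes if len(t.evidence) < min_clips]


themes = [
    Theme("Users distrust auto-pricing",
          [Clip("s03", 412, "I never know why the price jumped.")]),
    Theme("Onboarding feels too long"),  # e.g., an AI-suggested theme with no clip yet
]
print(untraceable(themes))
```

A check like this only confirms that evidence exists; whether the clip actually supports the theme still has to be judged by someone who watches it.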

ResearchOps for an AI era

If you’re going to move fast, you need a spine.

Clip-level citation in your repo. Make it trivial to jump from a line in a summary to the exact moment that birthed it. That single habit keeps everyone honest.

An AI-usage note in every study. Where did AI help (prep, moderation, coding, clustering)? Which model? How were outputs verified? Who signed off? When leadership sees your provenance, they stop confusing speed with certainty.

Policy guardrails. Approved tools/models, PII handling and redaction, retention windows, and escalation paths. Survey platforms now publish AI administration guidance because large organizations need clarity on where third-party AI is used and how it’s controlled; adopt the same stance for your stack.
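
The AI-usage note becomes easier to enforce when it is a structured record rather than free text. The Python sketch below mirrors the four questions above (where AI helped, which model, how outputs were verified, who signed off); the `AIUsageNote` class, its field names, and the placeholder values are assumptions for illustration, not an established schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class AIUsageNote:
    study: str
    stages: list         # where AI helped, e.g. prep, moderation, coding, clustering
    model: str           # which model or tool was used
    verification: str    # how outputs were verified
    signed_off_by: str   # who signed off


# Placeholder values; fill these in per study.
note = AIUsageNote(
    study="Checkout trust interviews (example)",
    stages=["prep", "coding", "clustering"],
    model="<approved model name>",
    verification="Every theme checked against source clips by two researchers",
    signed_off_by="<research lead>",
)
print(json.dumps(asdict(note), indent=2))
```

Storing the note as JSON next to the study files means it can be searched, validated, and shown to leadership alongside the findings.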

Ethics & Responsible AI

Whenever AI touches participant data, or appears in your product, researchers become the early-warning system. Three habits help:

- AI-aware consent. Tell people what tools you’ll use, where their data flows, how long you’ll keep it, and how to opt out, all in plain language. Many enterprise guides now provide sample copy for this; adapt it.

- Vulnerable-user protocols. Decide in advance how you’ll handle distress, power asymmetry, or sensitive disclosures. In AI-moderated contexts, define automatic escalation to a human. NN/g’s caution around AI during participant contact supports this conservative stance.

- Bias and failure-mode notes. When you report, include known model limitations and any bias checks you ran. This is the difference between “interesting” and “responsible.”

There’s a growing research base around the risks of cognitive offloading, that is, outsourcing judgment to automation. Keeping the human edge sharp is part ethics, part craft, and part professional pride.

What changes next and what doesn’t

Expect “conversational feedback” and multi-agent workflows to become normal. Some survey platforms already adapt follow-ups in real time; the next step is tools that not only summarize but propose actions and even trigger tests. That’s powerful, and risky, if it moves faster than your understanding. Take Qualtrics’ own messaging about adaptive follow-ups as a signal: governance and provenance will matter more, not less, as these systems get ambitious.

What won’t change is the heart of qualitative work: contact with real people, ethical judgment, and the craft of turning messy reality into decisions that improve lives. Nikki Anderson’s line captures the posture researchers should carry into the next few years: AI doesn’t replace researchers; it amplifies them. Use the speed, but keep the steering wheel.

Frequently asked questions

Can AI moderate interviews well enough to replace humans?

Not for foundational discovery or sensitive topics. With disclosure, safety rails, calibration against human baselines, and a human overseer, automated interviewers can scale narrow checks (multilingual pulses, late-stage comprehension). Treat them as throughput tools, not substitutes for judgment.

How do I choose the right research method?

Match the method to your goals: use user surveys, in-depth interviews, ethnographic studies, or focus groups, depending on whether you’re exploring user behaviors or validating hypotheses.

What’s the best way to recruit participants?

Recruit true target users, or non-users familiar with similar tools, to uncover diverse usability challenges. Consider working with user research platforms or panel recruitment services to find participants efficiently.

How do I craft the right research questions?

Prepare open-ended, goal-aligned questions. Think of Lincoln’s advice: sharpen your “axe” by refining your questions first. Use tools like AI-powered user survey tools to brainstorm or enhance clarity in survey design.

What are the steps in user research?

7 Key steps in the UX research process

Set research goals. The first step is to define your UX research objectives. …

Choose your research methods. …

Recruit your research participants. …

Plan your research session. …

Conduct the research. …

Analyze your research findings. …

Share findings and implement insights.

What are the 5 steps of UX research?

With the theory covered, let’s look at how to conduct user research, step-by-step.

Define the objectives for your research project. …

Identify the target audience to be researched. …

Select the right UX research methods. …

Recruit participants for gathering research findings. …

Choose a tool for conducting user research.

Why should research be done in users’ native language?

People express themselves more vividly in their native language. UX survey tools and user research software that support multi-language input help you gather richer, more authentic insights across regions.

How do I translate research into action?

Synthesize your findings into key themes, and prioritize next steps. Structured tools within a user research platform (like tagging, journey maps, and usability analysis tools) accelerate turning insights into product decisions.

Can a 6-step framework fit agile product teams?

Absolutely. Your six stages (understand the goal, choose a method, recruit, design questions, include native languages, and translate insights) align with iterative agile cycles. Pair these with sprint-based usability testing platforms to iterate fast.

Where does AI help most in qualitative work?

Prep, transcription, translation, first-pass coding and clustering, surfacing contradictions, and drafting audience-specific briefs; then humans decide what stands. NN/g recommends heavier use in these phases and caution during direct participant contact.

How do I keep executives from treating AI summaries as “the research”?

Make provenance visible. Every theme links to clips/quotes; recommendations are framed as option → evidence → trade-off. Show the AI-usage note in your repo so no one confuses speed with certainty.